Harness the Flexibility of Qual for Deeper Insights

Unmatched Agility

QualBoard® provides the flexibility to address any research objective, so you can run single- or multi-phase projects or take a more iterative approach with ease.

Time-Saving Automation

Powered by built-in automation and AI, QualBoard® allows you to save time, focus on the research, and jumpstart your analysis.

Powerful, User-Friendly Interface

The QualBoard® interface and mobile-friendly design make it easy for respondents to share thoughtful responses, with features that let you customize their experience and elicit the right insights.

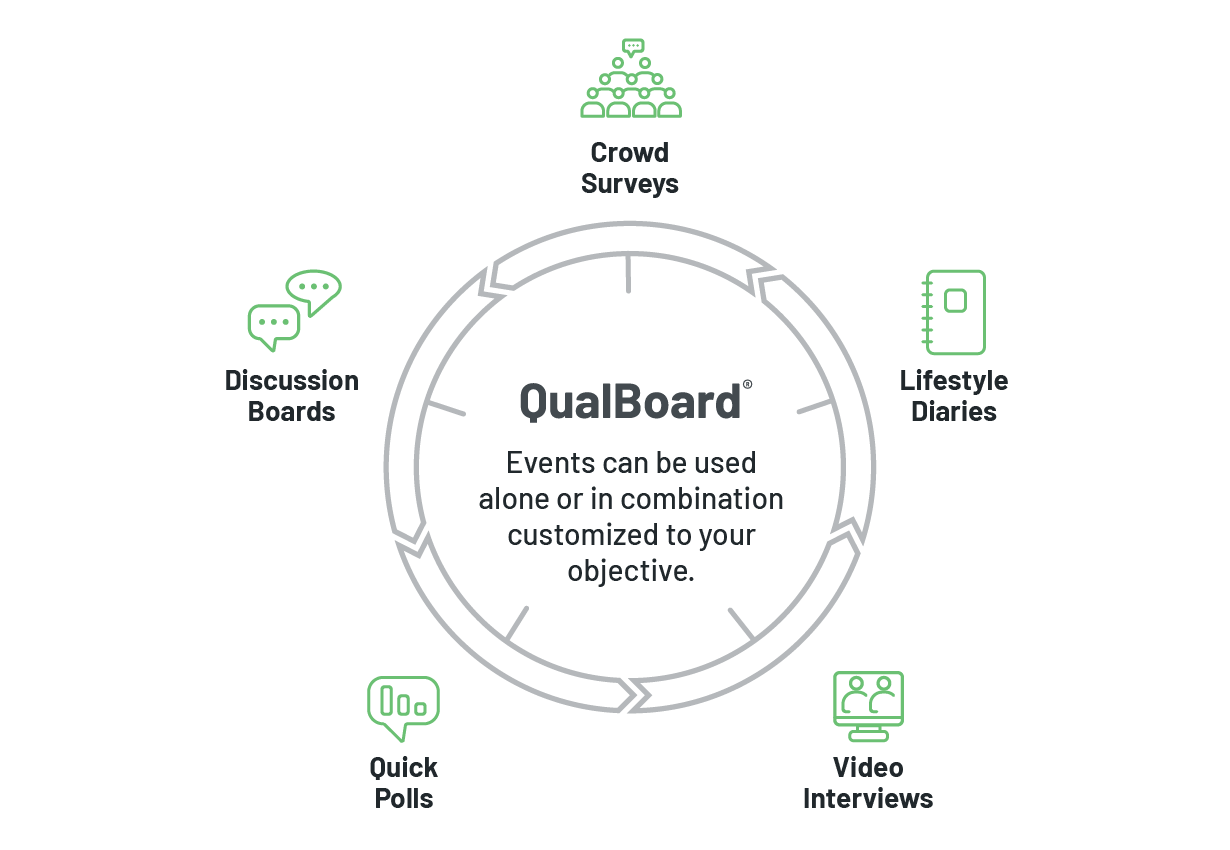

Multiple Events. One Platform.

Tailor your QualBoard® project to any qualitative research objective with flexible event types, ranging from bulletin board discussions and recurring diaries to real-time video chats, quick polls, and live crowd surveys.

Industry-Leading Technology Backed by World-Class Support

Our team is with you every step of the way to ensure your success.

Personalized Training Sessions

Dedicated Project Management

24/7 Technical Support

Enhanced QualBoard® Features

Take your research even further when you choose these unique QualBoard® tools.

Breakout Rooms

Collaborative Pods

Create focused discussions and collaborative activities within your video interviews.

QualLaborate

Concept Evaluation

Test images and elicit graphical qualitative results with our industry-leading markup tool.

QualLink

Survey Intercepts

Transition qualified survey participants seamlessly into an online discussion.